The Fleischmann-Pons press conference was 30 years ago this month! I didn’t pay much attention to it at the time—I was, after all, in preschool—and then I heard hardly a word about cold fusion in college, or in physics grad school, or as a professional physicist. Finally, a few years ago, I was surprised to read that people were still researching cold fusion, now also known as LENR (Low Energy Nuclear Reactions). So I started reading about it. I immediately found sources making a strong-sounding argument based in theoretical physics that it cannot possibly exist, and other sources making a strong-sounding argument based in experiments that it does in fact exist. …Well, one of these two arguments is wrong!

This blog has been all about the theoretical physics case against cold fusion. The case is simple (…well, it’s simple if you have a strong background in theoretical physics!), but is it correct? Does it have any loopholes? Is the underlying theory uncertain? Nope! After countless hours of reading papers and doing calculations and writing blog posts, I find the theoretical case against cold fusion more convincing than ever. That leaves the other possibility: maybe the experiments purporting to see cold fusion are all wrong?! This is of course the mainstream scientific consensus on cold fusion, but the detailed story of exactly how and why the experiments are alleged to be wrong is a bit hard to find—mostly buried in obscure technical papers and websites. Here I’ll do my best to summarize and evaluate these arguments.

I’m not any particular authority, and I’m probably misrepresenting both the case for and the case against. Sorry! Bear with me. I plan to revise this post as I learn more. And please do follow the links, especially in Section 1, if you want to learn directly from the primary sources and cut out the middleman (me)!

1. Sources

Back in 1989-1991, when cold fusion was a Big Deal, there were dozens of high-profile chemists and physicists providing detailed, substantive criticisms of cold fusion claims. The critics won the day, mainstream science moved on, and so did those critics, who decided that they had better things to do with their time than to keep paying attention to the small group of people who kept on researching cold fusion. And thus the remaining cold fusion researchers wound up in a little bubble, isolated from the rest of the scientific community, where they have remained ever since, by and large speaking at their own conferences and publishing in their own journals.

Luckily it’s not entirely a bubble; some people outside the cold fusion community have continued to read and comment on cold fusion claims, even to the present day. By far the most prolific and prominent of these is the physical chemist Kirk Shanahan, an expert on metal hydrides—biography here. His cold fusion-related technical journal publications are:

- “A systematic error in mass flow calorimetry demonstrated” (2002), in which he reanalyzes the calorimetry results described in this paper by Edmund Storms and suggests that a non-nuclear explanation he calls “Calibration Constant Shift” (CCS, Section 3.1 below) is compatible with the data.

- Comments on “Thermal behavior of polarized Pd/D electrodes prepared by co-deposition” (2005), a response to this paper by Szpak et al. The Szpak paper had claimed to rule out CCS, but Shanahan argued that they were misunderstanding CCS, and in fact CCS explained the Szpak data beautifully.

- …then Ed Storms replied to these two Shanahan papers here, prompting Shanahan to reply back with Reply to “Comment on papers by K. Shanahan that propose to explain anomalous heat generated by cold fusion”, E. Storms, Thermochim. Acta, 2006 (2006). I can’t find a response back by Storms … and in one of Storms’s books later on, there’s a discussion of this exchange that does not even acknowledge the existence of Shanahan’s reply, let alone answer it.

- Comments on “A new look at low-energy nuclear reaction research” (2010), a response to this paper. Here Shanahan addresses both calorimetry (Section 3 below) and evidence for nuclear effects (Section 4). Shanahan’s comment was in turn responded to by pro-cold-fusion folks here. Shanahan was not allowed to re-reply in the journal, but he has a point-by-point rebuttal here. More on this below.

Much more of Shanahan’s arguments and responses to criticism can be found at sci.physics.fusion, lenr-forum, and his Wikipedia talk page and archives, among other places. See also this section mostly written by Shanahan, from an old version of the Wikipedia article.

OK so why should we believe Kirk Shanahan over the cold fusion researchers whose experimental results he is disputing? Well, we shouldn’t believe anything, we should evaluate claims and counter-claims on their merits! That’s my goal too. Why do I tend to believe Shanahan? Not because of my fierce tribal loyalty to fellow UC Berkeley PhDs (Go Bears!), but rather because I keep checking, and I keep concluding that he is correct and that his critics are incorrect. While I’m indebted to Shanahan for many of the insights in this page, I endeavored to carefully check for myself everything I wrote, and I stand behind it.

2. General comments

2.1 Object-level vs meta-level arguments

- Example object-level argument: “I believe that cold fusion is real because Mosier-Boss et al. found triple-tracks in CR-39 which are characteristic of fast neutrons.”

- Example meta-level argument: “I believe that cold fusion is real because Martin Fleischmann thought it was real, and he studied it for years, and he is a world-renowned electrochemist, not an incompetent moron.”

Both of these categories of argument are in common use by cold fusion advocates, and both deserve careful consideration and response. Following the usual best practices, I’m going to tackle the object-level arguments first (sections 3 & 4), and the meta-level arguments afterwards (section 5).

2.2 H is not a “control experiment” for D

This one comes up all the time, so I might as well address it first.

H (ordinary hydrogen, also called protium) and D (deuterium) are two isotopes of hydrogen. In oversimplified high school chemistry, the story is that isotopes of the same atomic species have roughly the same chemical properties, and only behave differently in nuclear reactions. Going off this simple story, cold fusion proponents often do identical experiments with light water (H₂O) vs heavy water (D₂O), or with hydrogen gas (H₂) vs deuterium gas (D₂). The results are often different, and this is supposed to prove that nuclear reactions are happening.

This simple story from high school chemistry is a good way to think about some pairs of isotopes, like uranium-238 versus uranium-235, whose masses are 1% different. But it is not a good way to think about H versus D, whose masses are 100% different! In fact, H and D differ substantially in both their nuclear and their chemical properties.

So for example: H & D have 10% different enthalpy of absorption into palladium and >30% different diffusion coefficients in palladium. (In fact, palladium is sometimes used to separate hydrogen isotopes!) Light water and heavy water have, among other things, 25% different viscosity, 60mV different thermoneutral voltages for electrolysis, and 10% different heat capacity per volume.

I could go on, but you get the idea: there are many factors that might account for different results between D and H experiments, even if there are no nuclear reactions going on. It’s still a great idea to do both D experiments and H experiments, but neither is a “control” for the other in the usual sense. They’re just two different experiments.

3. Excess heat

“Excess heat”—more heat energy flowing out of a system than can be accounted for by non-nuclear effects—is the oldest, the most famous, and the most commonly-cited evidence for the existence of cold fusion. But is it real, or a measurement error?

First, some background. According to cold fusion proponents, the classic cold fusion experiment goes something like this. You have an electrolysis cell containing heavy water D₂O, through which you’re running an electric current between a palladium cathode and a platinum anode, thus splitting D₂O into D₂ and O₂ gas. You do this for many weeks, with nothing much happening. During this time the palladium “loads” (fills with deuterium) and “conditions” (changes in mysterious ways that nobody understands). Then finally, 80% of the time … nothing happens ever. But the other 20% of the time, the excess heat starts, and continues for many more weeks, often sporadically starting and stopping. When you multiply the measured heat power output (typically <1 watt) by the many weeks or months during which it was present, you get a large amount of energy (typically >1 megajoule), much more than could be caused by exothermic chemical reactions in the cell. If the excess heat is not from chemical energy, then by process of elimination, it must be from nuclear energy.

Cold fusion skeptics agree with the proponents up to the point where “excess heat starts”. The skeptics say instead: “apparent excess heat starts”—i.e., the chemical processes going on in the cell change, and this change is wrongly interpreted as excess heat.

Exactly how do the chemical processes change, and exactly how is that wrongly interpreted as excess heat? Below (Sections 3.1-3.4) I’ll focus on a particular set of mechanisms proposed by Kirk Shanahan, because I think he has convincingly shown that these errors can account for most or all of the excess heat observations claimed by cold fusion proponents. In Section 3.5, for completeness, I’ll mention a couple other potential error mechanisms that might pop up in certain cases.

3.1 “Calibration Constant Shift” (CCS)

Excess heat experiments use calorimeters, instruments that measure heat flow. When you calibrate a calorimeter (like any other instrument), you’re finding a relationship between the raw instrument output (e.g. a voltage measurement) and the quantity that the instrument is purporting to measure (i.e., the net amount of heat being generated in the cell). You do a calibration by, for example, creating a known amount of heat with a Joule heater and seeing what the instrument output is, and then turning that into a linear or non-linear relationship. Once that relationship is established, you use it in the other direction, inferring heat from the raw instrument output. However, if the calibration is not stable over time, this procedure will eventually start giving you the wrong value for heat.
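Here’s a minimal Python sketch of that calibrate-then-invert procedure, assuming a purely linear relationship between heater power and raw instrument output. The function names and all the numbers are hypothetical, made up for illustration; real calorimeters can need nonlinear fits and a much more careful error analysis.

```python
def fit_calibration(heater_powers_w, raw_outputs):
    """Least-squares fit of raw_output = slope * power + offset."""
    n = len(heater_powers_w)
    mean_p = sum(heater_powers_w) / n
    mean_s = sum(raw_outputs) / n
    cov = sum((p - mean_p) * (s - mean_s)
              for p, s in zip(heater_powers_w, raw_outputs))
    var = sum((p - mean_p) ** 2 for p in heater_powers_w)
    slope = cov / var
    offset = mean_s - slope * mean_p
    return slope, offset


def infer_power_w(raw_output, slope, offset):
    """Use the calibration in the other direction: raw output -> heat."""
    return (raw_output - offset) / slope


# Hypothetical calibration run with a Joule heater (made-up numbers).
heater_w = [0.0, 2.0, 4.0, 6.0]
readings = [0.10, 0.50, 0.90, 1.30]
slope, offset = fit_calibration(heater_w, readings)

# Later the instrument's true response drifts: slope 0.21 instead of 0.20.
true_w = 3.0
drifted_reading = 0.10 + 0.21 * true_w
inferred_w = infer_power_w(drifted_reading, slope, offset)
print(f"true {true_w:.2f} W, inferred {inferred_w:.2f} W")  # true 3.00 W, inferred 3.15 W
```

A 5% drift in the calibration slope shows up directly as a 5% error in the inferred heat: here, 0.15 W of phantom “excess”.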

Why might a calibration shift? There are dozens of possible reasons, some more likely than others. Shanahan emphasizes one cause of calibration shift in particular: Calorimeter heat capture efficiency. No calorimeter captures 100% of the heat generated in the cell—there are always parasitic heat loss pathways (heat escapes through imperfect insulation, through wire feedthroughs, etc.). The calorimeter heat capture efficiency is the fraction of heat energy that exits the cell via the calorimeter, as opposed to sneaking out through a different part of the apparatus. Calorimetry measurement procedures implicitly correct for the fact that heat capture efficiency is <100%. If you over-correct for that fact, you get a spurious measurement of excess heat.

Example: Suppose that there are two locations within a cell:

- When heat is generated at location X, 99% of it exits through the calorimeter, and the other 1% escapes through a parasitic heat loss pathway.

- When heat is generated at location Y, 90% of it exits through the calorimeter, and the other 10% escapes through a parasitic heat loss pathway.

If you calibrate this calorimeter with a Joule heater at Y, and do a cold fusion experiment involving periods of heat generation at X, you’ll incorrectly conclude that there are periods of excess heat even if there aren’t. See why?

(Conversely, if you calibrate using a heater at X and then create heat at Y, you’ll get the opposite—lack of heat. More on that below.)
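To make the X/Y example concrete, here’s a small sketch that treats calibration as implicitly dividing the captured heat by the capture efficiency at the calibration heater’s location. The 99% and 90% figures are the hypothetical ones from the example; the function name and the 5 W heat source are made up for illustration.

```python
# Hypothetical heat capture efficiencies from the X/Y example.
CAPTURE_EFFICIENCY = {"X": 0.99, "Y": 0.90}


def inferred_power_w(true_power_w, heat_location, calibration_location):
    """Heat the experimenter infers when heat is really generated at
    `heat_location`, but the calibration was done with a heater at
    `calibration_location`.

    The calorimeter only sees the captured fraction of the heat, and the
    calibration implicitly divides by the capture efficiency that held at
    the calibration heater's location.
    """
    captured_w = true_power_w * CAPTURE_EFFICIENCY[heat_location]
    return captured_w / CAPTURE_EFFICIENCY[calibration_location]


true_w = 5.0
spurious_excess = inferred_power_w(true_w, "X", "Y") - true_w
spurious_deficit = inferred_power_w(true_w, "Y", "X") - true_w
print(f"calibrate at Y, heat at X: {spurious_excess:+.3f} W")   # +0.500 W
print(f"calibrate at X, heat at Y: {spurious_deficit:+.3f} W")  # -0.455 W
```

No nuclear reactions are involved: the 10% “excess” comes entirely from the mismatch between where the calibration heater sat and where the heat was actually generated.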

(X and Y don’t have to be different locations per se; they can also be different patterns of convective heat flow, different probabilities of bubbles sticking to a particular feedthrough where they change the heat loss, etc. But different locations seem an especially likely culprit, as discussed in Section 3.3 below.)

3.2 Unexpected In-Cell Combustion (UICC) [for open cells]

Besides CCS, there’s a second potential problem. The idea here is simple. In electrolytic cells, D₂ gas and O₂ gas are both present (they bubble off the two electrodes), and if the two come together in the presence of an ignition source (e.g. a catalytic surface), they can combine into D₂O, releasing heat. In an open electrolytic cell, the D₂ and O₂ are supposed to flow out of the cell without burning. If that’s what the experimenter assumes is happening, but if in fact some burning does occur in the cell, well there’s your excess heat source right there!

(This is an issue for open cells only. The opposite of an open cell is a closed cell, which does not allow gas out, but instead has a “recombination catalyst” in the cell to facilitate the D₂ + O₂ → D₂O reaction. Open and closed cells are both common in the cold fusion literature.)

Here’s an example of what this mis-measurement looks like, in the bigger picture. You have a cold fusion cell, designed to continually expend 10W of electrical power from the wall outlet. Before you start, you get out your pocket calculator and chemistry handbook, and find that out of those 10W, 5W are supposed to turn into heat, while the other 5W are supposed to go into splitting D₂O into D₂ + O₂ gas. OK, now you do the measurement. For the first month, your calculation is borne out, and you see the expected 5W of heat. But then, unbeknownst to you, some fraction of the D₂ + O₂ gas starts burning back into D₂O before escaping the cell, and you see 7W of heat (instead of the expected 5W) from the 10W of input electric power.

Now, if you were a cold fusion researcher, you would summarize all these numbers in the following statement: “Great success! I saw 2 watts of excess heat—20% of input power! It continued for 2 months, so that’s a whopping 10 megajoules! Now let’s start a company and scale it up!” Was the experiment actually successful? No, of course not, it was actually a creative way to waste electricity. Oh wait, not even that. It’s an unoriginal way to waste electricity, since perpetual-motion-machine crackpot Stanley Meyer was pointlessly splitting and recombining water way back in the 1970s!
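The arithmetic in that example can be checked with a few lines of Python. This is just bookkeeping for the hypothetical cell above; “2 months” is taken as 60 days, and the sketch assumes that burning a fraction of the gas in the cell returns that same fraction of the 5 W stored in it as heat.

```python
SECONDS_PER_DAY = 86_400


def uicc_bookkeeping(input_w, expected_heat_w, measured_heat_w, days):
    """Summarize an 'excess heat' claim for a hypothetical open cell."""
    excess_w = measured_heat_w - expected_heat_w
    gas_w = input_w - expected_heat_w  # power stored in the D2 + O2 stream
    return {
        "excess_w": excess_w,
        "excess_fraction_of_input": excess_w / input_w,
        "excess_energy_mj": excess_w * days * SECONDS_PER_DAY / 1e6,
        # Fraction of the gas that must burn in the cell to explain it all:
        "recombined_fraction": excess_w / gas_w,
    }


summary = uicc_bookkeeping(input_w=10.0, expected_heat_w=5.0,
                           measured_heat_w=7.0, days=60)
for key, value in summary.items():
    print(f"{key}: {value:.3g}")
```

The headline “10 megajoules” requires only 40% of the gas to recombine before it leaves the cell.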

Anyway, that’s “Unexpected In-Cell Combustion (UICC)”, a term I just made up. Shanahan often lumps UICC together with the previous section as a flavor of CCS, but I think that’s bad pedagogy. Why? Because UICC has nothing whatsoever to do with calorimeter calibration constants!

(UICC can also stand for “Underestimated In-Cell Combustion”, since sometimes the researchers admit that it might be happening, but try to downplay its magnitude. Shanahan has argued that this is the case in some of the original Fleischmann-Pons papers, for example.)

So that’s UICC, which would give a spurious excess heat measurement even for a hypothetical perfect calorimeter with 100% heat capture efficiency for the entire cell.

Can’t you rule out UICC by measuring outflowing gas volume? Yes, if it’s measured with sufficient accuracy, but the cold fusion researchers have apparently not been up to the task! The example I’m familiar with is the famous paper Szpak et al. 2004 (on which Shanahan commented). Szpak explicitly tried to address UICC. They calculated that 7.2 cm³ of D₂O should have been consumed assuming no UICC, and they measured 7.7 cm³, “which is within experimental error” of the 7.2 cm³ prediction.

…Did you catch the mistake? They answered the wrong question! This is textbook confirmation bias—being attentive to whether the data is compatible with your favored theory, and oblivious to whether it’s compatible with other theories too! OK sure, I’ll accept that this 7.7 cm³ measurement is compatible with the hypothesis that cold fusion is happening and there is no UICC. But is it also compatible with the hypothesis that UICC is to blame for all the excess heat? What D₂O consumption is predicted by the UICC hypothesis, and is that number within the experimental error bars or not? Amazingly, the Szpak paper doesn’t answer this question!!
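Here’s a sketch of the comparison that’s missing: predicted D₂O consumption under each hypothesis. It assumes Faraday’s law with 2 electrons per D₂O molecule split, and that each mole of D₂O re-formed in the cell releases 2F·E_tn of heat, where E_tn is the thermoneutral voltage (an assumed value of about 1.54 V here). It ignores evaporation and spray losses, which a real analysis would have to include. The current, duration, and excess power are hypothetical placeholders, not Szpak’s numbers.

```python
FARADAY_C_PER_MOL = 96_485.33
D2O_MOLAR_MASS_G = 20.03
D2O_DENSITY_G_PER_CM3 = 1.10   # approximate, near room temperature
E_TN_V = 1.54                  # assumed thermoneutral voltage for D2O


def d2o_consumed_cm3(current_a, seconds, recombination_w=0.0):
    """Net volume of D2O lost from the cell.

    Electrolysis splits current*t/(2F) mol of D2O. If `recombination_w` of
    heat comes from D2 + O2 burning back into D2O inside the cell, that
    returns recombination_w*t/(2F*E_tn) mol of D2O to the cell.
    """
    split_mol = current_a * seconds / (2 * FARADAY_C_PER_MOL)
    recombined_mol = recombination_w * seconds / (2 * FARADAY_C_PER_MOL * E_TN_V)
    return (split_mol - recombined_mol) * D2O_MOLAR_MASS_G / D2O_DENSITY_G_PER_CM3


# Hypothetical run: 0.2 A for 10 days, 0.1 W of claimed excess heat.
t = 10 * 86_400
no_uicc = d2o_consumed_cm3(0.2, t)
all_uicc = d2o_consumed_cm3(0.2, t, recombination_w=0.1)
print(f"no UICC: {no_uicc:.2f} cm3, UICC explains all excess: {all_uicc:.2f} cm3")
```

Only if the measured consumption, error bars included, excludes the second number does the measurement actually rule out UICC.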

Side note #1: UICC in gas-loading & heat-after-death experiments. Without getting into details, these are two types of cold fusion experiments where, in theory, there should be no O₂ present, and therefore no UICC. In reality, don’t forget that O₂ could enter through a leak, or flow back through a gas vent valve, or be present from the start of the experiment, etc. So UICC could potentially cause an error in these cases too. How big an error might it be? As always, experimenters need to answer that question, explain the relevant procedures, and show their data, if they want people to believe them.

Side note #2: Are we talking about electrochemical or non-electrochemical recombination? There are two flavors of recombination, electrochemical (where electrons transfer from an electrode to an oxygen atom) and non-electrochemical (plain old combustion of D₂ gas and O₂ gas anywhere in the cell). Shanahan only talks about the latter. What about the former? This is more commonly described as “Faraday efficiency below 100%”, and was famously proposed and demonstrated in 1995 by Shkedi here, back when Bose (yes, Bose the audio company!) had a lavishly-funded cold fusion research program. As far as I understand, significant errors from Faraday efficiency probably do occur in some cold fusion papers, but only if the cold fusion experiment is using unusually low current. So following Shanahan, I’m just talking about non-electrochemical recombination here. That’s why I called it “combustion” in my “UICC” acronym, to eliminate any possible confusion.
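A rough sketch of why low current matters, assuming standard open-cell bookkeeping in which the heat generated is taken to be I·(V − E_tn), i.e., every electron is assumed to make gas that escapes. If only a fraction η of the current does (the rest recombines electrochemically, depositing its energy in the cell as heat), the true heat is I·(V − η·E_tn), so the bookkeeping misses (1 − η)·I·E_tn. The operating points and efficiencies below are hypothetical, and E_tn ≈ 1.54 V is an assumed value for D₂O.

```python
E_TN_V = 1.54  # assumed thermoneutral voltage for D2O electrolysis


def missed_heat_w(current_a, cell_voltage_v, faraday_efficiency):
    """Heat that open-cell bookkeeping fails to account for when only a
    fraction `faraday_efficiency` of the current produces escaping gas."""
    assumed_heat_w = current_a * (cell_voltage_v - E_TN_V)
    actual_heat_w = current_a * (cell_voltage_v - faraday_efficiency * E_TN_V)
    return actual_heat_w - assumed_heat_w


# Hypothetical operating points: (current A, cell voltage V, Faraday efficiency)
for current, voltage, eff in [(0.01, 2.5, 0.90), (0.5, 5.0, 0.99)]:
    missed = missed_heat_w(current, voltage, eff)
    share = missed / (current * voltage)
    print(f"I={current} A: apparent excess {missed * 1e3:.2f} mW "
          f"({share:.1%} of input power)")
```

With these made-up numbers the low-current case produces an apparent excess of several percent of input power, versus a fraction of a percent at high current, which is the regime where this error would matter most for a percentage-of-input claim.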

3.3 At-The-Electrode Recombination (ATER), a possible cause of both CCS & UICC

Where are we at so far? Cold fusion advocates frequently state that the excess heat signal is many times larger than the experimental error bars. But when you put realistic error bars on UICC (in the open cell case) and on the calorimeter calibration constants (in all cases), and propagate the errors through, you find that the error bars are much larger than they look, and the excess heat signal is often swallowed inside them. Thus the data is compatible with no excess heat. Getting this far doesn’t require understanding in any detail why CCS & UICC are happening. But Shanahan also offers a nice hypothesis for that too.
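Here’s a minimal sketch of that error propagation. It assumes independent error sources added in quadrature, and treats a plausible calibration-constant shift as a relative uncertainty on the measured output power. Treating a systematic shift as if it were a random 1σ error is itself an approximation, and all the numbers are hypothetical.

```python
import math


def excess_heat_significance(p_out_w, p_in_w, noise_w, input_unc_w, cal_rel_unc):
    """Return (excess power, noise-only sigma, total sigma) in watts."""
    excess_w = p_out_w - p_in_w
    cal_unc_w = p_out_w * cal_rel_unc  # uncertainty from the calibration constant
    total_w = math.sqrt(noise_w**2 + input_unc_w**2 + cal_unc_w**2)
    return excess_w, noise_w, total_w


excess, noise, total = excess_heat_significance(
    p_out_w=10.5, p_in_w=10.0, noise_w=0.05, input_unc_w=0.02, cal_rel_unc=0.05)
print(f"excess {excess:.2f} W = {excess / noise:.0f} sigma vs noise alone, "
      f"{excess / total:.1f} sigma vs total uncertainty")
```

In this made-up case a “10-sigma” signal drops below 1 sigma once a 5% calibration uncertainty is included.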

The typical cold fusion configuration involves a palladium cathode where deuterium gas is evolved (e.g. 2D₂O + 2e⁻ → D₂ + 2OD⁻), and a platinum anode where oxygen gas is evolved (e.g. 2D₂O → O₂ + 4D⁺ + 4e⁻) [the exact reactions vary depending on pH]. The bubbles of oxygen gas can travel from anode to cathode (details), where deuterium gas can enter the bubble, and then the bubble can ignite and explode thanks to the catalytic properties of the cathode surface. Shanahan calls this “At-The-Electrode Recombination” (ATER), and it seems to explain a lot:

- Why do cold fusion experiments require many hours of loading and conditioning before the excess heat signal appears? The hypothesis is that the cathode surface microstructure and composition has to be just right to enable the oxygen bubbles to stick to it, for the deuterium gas to enter the same bubble, and for the explosion to trigger. Shanahan calls this a “Special Active Surface State” (SASS). The days or weeks of loading and conditioning the palladium will gradually change the surface microstructure and composition, due to the accumulation of impurities, the stresses and strains and “dislocation loop punching” related to deuterium loading, and so on. Eventually, some fraction of the time, the surface will wind up in a SASS.

- Why do cold fusion “co-deposition” style experiments start producing excess heat immediately? Without getting into details, in these experiments the surface is quickly built up from scratch during the course of the experiment, so it could plausibly get the right microstructure and composition to be a SASS from the start. As part of this, Shanahan speculates that the co-deposition might create a dendritic surface structure that is unusually effective at grabbing and holding bubbles.

- What are the little flashes on the cathode that are sometimes seen with IR cameras? Those are the little D₂ + O₂ bubbles exploding!

3.4 CCS / UICC FAQ

Q: Is CCS / UICC falsifiable?

A: Yes, absolutely! The best way—and sorry if this sounds mean—would be to sell me a cold fusion power generator that can boil me a kettle of tea every morning without ever needing to be plugged into a power outlet. Failing that, if an experimentalist wanted to take CCS / UICC seriously, and evaluate whether it’s happening, there are obvious steps to take. I would want to see a table of all the calorimeter calibration results, with measurement procedure, realistic error bars, and timestamps. I would want to see calibration using multiple resistance heaters located in different parts of the apparatus, including the recombination catalyst and each electrode, and used at different times including both when excess heat is happening and when it isn’t. I would want to see an analysis of what the calorimeter heat capture efficiency is, how it varies, and how that amount of variation would affect the measurement results. I would want this analysis to also incorporate other aspects of the power budget, such as measurements of Faraday efficiency and extracted gas from open cells, again including realistic error bars on all quantities, and error propagation all the way up to the headline results. At the end of all that, I would want to see excess heat overwhelmingly larger than all the possible errors stacked together, and I would want to see this kind of analysis from more than one independent lab with quantitatively consistent results.

Q: …And has that happened?

A: Real cold fusion papers are nowhere close to meeting the criteria above, as far as I’ve seen. We’re lucky if a paper even pays lip service to the possibility of CCS / UICC. When they do, they seem to wildly misunderstand it. A great example is the 2010 Marwan et al. reply to Shanahan. They repeatedly call it “random calibration constant shifts”. But systematic errors are not random! They list “six conclusions” of excess heat studies that they say would be “impossible to obtain” if CCS were the explanation. But if they had understood CCS, they would have had no problem explaining any of those six! (See the SASS discussion above.) They say “The [CCS hypothesis] can thus be shown quantitatively to fail in all cases of excess power reported in mass flow calorimeters.” (Emphasis in original.) But the original paper on CCS was based on a quantitative analysis of data from—you guessed it—a mass flow calorimeter! (Not to mention that much cold fusion data is from open-cell experiments, in which case we’re also talking about UICC, which has nothing to do with calorimeter type or quality!)

An exception that proves the rule: As far as I can tell, the Earth Tech folks seem to be extremely conscientious in their calorimetry methodology and analysis, much more so than others—see their discussion of “MOAC, Mother Of All Calorimeters” and judge for yourself. Starting around 2004, following this rigorous protocol, they ran experiment after experiment (8 of them total!) trying to reproduce different excess heat results from the cold fusion literature. They put in great effort to make faithful replications, even working with the original authors, sourcing materials from the same vendors, and so on. How’d it go? “To date, our operating experience…has been singularly devoid of opportunity to observe real excess heat signals.” Well, how about that!?

Q: Cold fusion advocates say that the excess heat signal is way above the noise. So how can CCS / UICC be right?

A: CCS / UICC are systematic errors, not noise. They’re different! Systematic errors are related to accuracy, noise is related to precision. The real question is: Is the excess heat way above the total experimental uncertainty (of which noise is just one component)? The answer is: We don’t know, because cold fusion researchers are leaving out some of the sources of uncertainty!
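A quick simulation makes the distinction concrete. It assumes a hypothetical true heat of 5 W, a fixed systematic offset of 0.5 W (standing in for CCS / UICC), and Gaussian noise of 0.2 W; none of these numbers come from a real experiment. Averaging more readings shrinks the noise, but the offset doesn’t budge.

```python
import random
import statistics


def averaged_error(n_readings, true_w=5.0, bias_w=0.5, noise_w=0.2, seed=1):
    """Return (mean error, standard error of the mean) for n noisy, biased readings."""
    rng = random.Random(seed)
    readings = [true_w + bias_w + rng.gauss(0.0, noise_w) for _ in range(n_readings)]
    mean_error = statistics.fmean(readings) - true_w
    std_error = statistics.stdev(readings) / n_readings ** 0.5
    return mean_error, std_error


for n in (10, 1_000, 100_000):
    err, sem = averaged_error(n)
    print(f"n={n:>6}: error {err:.3f} W, +/- {sem:.4f} W from noise")
```

With enough readings the noise bar becomes tiny, so the 0.5 W offset looks overwhelmingly “significant”, yet it is entirely spurious.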

Q: Why is it always “excess heat” and never “lack of heat”?

A: For UICC, it’s obvious. For CCS, the ATER hypothesis involves a heat source moving from the recombination catalyst, located in the gas head space of the cell, to the electrode, submerged in the fluid. Shanahan says the gas head space will almost always have lower heat capture efficiency than the electrode, because of parasitic heat loss pathways “such as penetrations of power leads and thermocouples”. That means excess heat, not lack of heat.

Q: What about “heat after death”?

A: “Heat after death” is a term describing electrochemical cold fusion cells remaining hot after the current turns off, even (allegedly) hot enough to boil off all the water in the cell. If we’re worried about calibration constant shift, the gradual evaporation of water turning a liquid cell into a gas cell is a horrifically bad case, and would certainly cause dramatic changes in calorimeter heat capture efficiency. Remember, there is a real heat source present: Over the course of days or weeks, deuterium atoms are gradually leaving the palladium, combining into deuterium gas, and potentially combusting with oxygen (which may be present due to a gas vent, or leaks, or leftovers from the electrolysis phase of the experiment). So mix together a real UICC heat source, and a CCS that leads to measuring the heat source wrong, and the unjustified assumption that all the water loss is evaporation rather than liquid-phase mist, and often a miscalculation of evaporative heat loss too … and you can easily get wildly inflated claims of excess heat. Also note that the most famous heat-after-death claim is Mizuno, a decades-old undocumented anecdote, not a properly-instrumented scientific measurement. Shanahan has deconstructed the Mizuno anecdote here and elsewhere.
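To see how much the evaporation assumption matters, here’s a hypothetical sketch. If some of the lost water actually left as liquid mist, then assuming it all evaporated credits the cell with latent heat it never supplied. The 2,260 J/g latent heat is the familiar value for ordinary water near 100 °C, used only as a placeholder (D₂O differs somewhat); the 50 g and 20% mist figures are made up.

```python
LATENT_HEAT_J_PER_G = 2260.0  # placeholder: ordinary water near 100 degrees C


def phantom_heat_j(water_lost_g, mist_fraction, latent_heat_j_per_g=LATENT_HEAT_J_PER_G):
    """Heat wrongly credited to the cell when `mist_fraction` of the lost
    water left as liquid droplets but is booked as evaporated."""
    return water_lost_g * mist_fraction * latent_heat_j_per_g


phantom = phantom_heat_j(water_lost_g=50.0, mist_fraction=0.2)
print(f"{phantom / 1e3:.1f} kJ of phantom heat")  # 22.6 kJ of phantom heat
```

Every gram wrongly booked as evaporated adds a couple of kilojoules to the apparent output.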

Q: What about melting and explosions?

A: Cold fusion experiments tend to involve explosive mixtures of deuterium and oxygen gas, and the means to ignite them (both catalysts and electrical equipment). Is it any wonder that there are sometimes big explosions? The famous explosion reported in the original Fleischmann-Pons paper was likely of this type. According to Nathan Lewis: “My understanding…is that Pons’s son was there at the time, not Pons himself. I understand that someone turned the current off for a while. When that happens hydrogen naturally bubbles out of the palladium cathode, and creates a hazard of fire or explosion.” (It was nominally an open cell which would mitigate gas buildup, but gas outlet lines can become blocked in various circumstances.) Also, palladium is ductile, so an explosion can severely warp it in a way that might make it look like it partially melted, if you don’t have explosives experience—or at least so says Shanahan, who does.

For the small apparently-melted spots that sometimes show up on the periphery of a palladium electrode, Shanahan offers a nice possible mechanism here.

3.5 Other possible excess heat errors

I’m familiar with two other proposed systematic errors that might lead to spurious measurements of excess heat. First, the AC contribution to input electrical power can be underestimated. This is indirectly related to how bubbles on the electrodes nucleate and detach. Second, you can create unintentional heat pumps that, like a backwards refrigerator, cool the outside of the cell by moving heat to the inside of the cell. For more details on these, see the Appendix.
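One generic way an AC contribution gets missed can be sketched under a simple assumption: the meter reports time-averaged voltage and time-averaged current separately, and input power is computed as their product. If voltage and current both fluctuate in step, the true average power is the average of V·I, which is larger. The sinusoidal waveforms and amplitudes are hypothetical; this is not a model of any specific cell.

```python
import math


def average_power_w(v_dc, v_ac, i_dc, i_ac, phase_rad=0.0, samples=10_000):
    """Compare true mean power <V*I> with <V>*<I> for sinusoidal ripple."""
    ts = [2 * math.pi * k / samples for k in range(samples)]
    volts = [v_dc + v_ac * math.sin(t) for t in ts]
    amps = [i_dc + i_ac * math.sin(t + phase_rad) for t in ts]
    true_w = sum(v * i for v, i in zip(volts, amps)) / samples
    dc_only_w = (sum(volts) / samples) * (sum(amps) / samples)
    return true_w, dc_only_w


true_w, dc_only_w = average_power_w(v_dc=5.0, v_ac=0.5, i_dc=0.2, i_ac=0.1)
print(f"true {true_w:.4f} W, product of averages {dc_only_w:.4f} W")
```

The missing term is (v_ac · i_ac / 2)·cos(phase); here 0.025 W of input power goes uncounted and would show up as apparent excess heat.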

I admit that these sound a bit fanciful, but they’re really grounded in thinking about how the cells are constructed, how the measurements are done, and what are the conditions in which excess heat appears.

Q: We have three explanations for excess heat now. It seems redundant! Is the heat overestimated, or is the input power underestimated, or is there an unintentional heat pump into the cell, or what?

A: Beats me! But it’s usually safe to assume that different experiments have different problems: we should not expect a “Grand Unified Theory of Cold Fusion Errors”. (“All happy families are alike; each unhappy family is unhappy in its own way.”) For what it’s worth, if I had to make a wild guess, I would say that CCS / UICC is the main problem in most experiments, input power measurement is maybe a problem sometimes, and heat pumping is always negligible. But the real point is, if someone claims they’ve seen excess heat far outside their experimental error bars, but the error bars don’t properly account for all of the possible error sources, well then I don’t believe them, and nobody else should either—not until we can buy a cold fusion water heater that doesn’t plug into the wall.

In fact, just like CCS / UICC above, the AC power and heat pump errors are almost universally ignored by cold fusion researchers, except for the very best and most conscientious cold fusion researchers … who get as far as paying lip service to these problems while misunderstanding them! Again, see the Appendix.

4. Nuclear claims: Transmutations, helium, neutrons, tritium, etc.

Outside of excess heat, we find a dizzying array of dozens if not hundreds of diverse types of evidence for cold fusion. I’ve split them into three subsections: transmutations, helium, and miscellany.

4.1 Transmutations

A major category of cold fusion claims involves transmutations—e.g., that cesium atoms are turning into praseodymium atoms or whatever. Genuine transmutation does happen at a very low level due to cosmic rays, trace radioactivity, and so on. Real transmutations (beyond background) would certainly be proof of nuclear activity. Unfortunately, I have yet to see a cold fusion transmutation claim that was not consistent with conventional chemistry, in particular contamination plus data misinterpretation.

4.1.1 Surfaces are a disgusting mess of contaminants

This is not only an important principle of personal hygiene, but also an important principle of practical chemistry! Under many circumstances, contaminant molecules accumulate on surfaces. So you can have a bulk gas or liquid which has impurities in such small concentration as to be completely undetectable … yet when you put a surface in it, it can get totally coated with the stuff!!

I have a bit of personal experience with this. In grad school we built a special laser spectroscopy system that could measure the topmost molecular layer of water at the air-water interface. Measure it, and what do you find? 100% of the water surface is surfactant impurities. So you clean all the glassware, and get the purest ingredients that money can buy, and work under a flow hood and keep everything sealed. What do you find now? 10% of the surface is surfactant impurities! So you go through exhausting multi-hour cleaning and purification procedures for anything that might touch anything that might touch the water. What do you find now? 1% of the surface is surfactant impurities! And so on…

Let’s put some numbers behind this idea (based on here), for an electrochemical example. (Gases, membranes, and other systems have similar problems.) Let’s say a researcher uses 50 cm³ of 99.99999% pure water. That sounds pretty pure, doesn’t it? But it still has 10¹⁷ atoms of contaminant. If there is a chemical or electrochemical attraction that pulls these atoms onto the surface of, say, a 2 cm × 2 cm electrode, they could coat that whole electrode 100 atomic layers thick!
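Here’s a back-of-the-envelope version of that estimate. The assumptions are mine: the impurity fraction is counted per water molecule, the water is ordinary water at 1 g/cm³, and a monolayer holds roughly 10¹⁵ adsorbed particles per cm² (a typical order of magnitude). With these choices the answer comes out at tens of monolayers rather than exactly 100; counting impurities per atom instead of per molecule, or assuming fewer surface sites, pushes it up. Either way, the point stands.

```python
AVOGADRO_PER_MOL = 6.022e23


def contaminant_monolayers(volume_cm3, purity, area_cm2,
                           density_g_per_cm3=1.0, molar_mass_g=18.0,
                           sites_per_cm2=1e15):
    """Return (number of contaminant particles, monolayers if all adsorb)."""
    molecules = volume_cm3 * density_g_per_cm3 / molar_mass_g * AVOGADRO_PER_MOL
    contaminants = molecules * (1.0 - purity)
    return contaminants, contaminants / (area_cm2 * sites_per_cm2)


count, layers = contaminant_monolayers(volume_cm3=50.0, purity=0.9999999, area_cm2=4.0)
print(f"{count:.1e} contaminant particles, about {layers:.0f} monolayers")
```
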

This problem applies especially to surface-sensitive analytical techniques. Pretty much all the results discussed below (XPS, SIMS, EDX, etc.) come from highly surface-sensitive measurements, probing just the atoms at or near the outermost surface of the sample, which again is exactly where the impurities are concentrated.

As an example of the role of contamination, in this paper, Scott Little of Earth Tech (same folks who did the calorimetry discussed earlier) tried to get to the bottom of alleged transmutations in the “CETI RIFEX kit” (a scaled-down Patterson power cell). He traced iron to the beads, zinc to the o-rings, barium to the epoxy, etc. etc.

4.1.2 Misidentified peaks

Let’s start with SIMS (Secondary Ion Mass Spectrometry). You bombard the surface with fast ions, which then knock out (“sputter”) ions from the material, and a mass analyzer measures the mass of each ion. The most popular way to misinterpret SIMS data is the molecular ion problem. If SIMS is measuring a single atom, you can (usually) tell exactly what it is. But if it might be 2 or 3 or 4 atoms attached together, there are a lot of possibilities. SIMS instruments are sometimes set up to suppress the detection of molecular ions, but of course this won’t eliminate them entirely.

Here’s a fun SIMS example, from this paper, discussed by Shanahan here:

The authors understand the molecular ion problem; that’s why they labeled the 135 peak as “CsD”. By the same token, the 137 peak is obviously CsD₂, right? Not according to the authors!

Now, the authors are not such morons as to ignore this obvious answer; instead they pay lip service to it while dismissing it for far-fetched reasons. They say the 137 peak is higher than the 135 peak, whereas they expected more CsD than CsD₂. Well, perhaps their expectation was wrong?? They say the ratio of the 133 peak to 137 peak is inconsistent. Well, perhaps the samples are not all exactly the same?? They say the instrument’s resolution is high enough to pick up the 0.02% mass difference between CsD₂ and the measured peak location. Well, no it isn’t: you can see that the peak width is larger than that. In fact, the authors use the especially wide ¹³³Cs peak as the reference point to label the x-axis! That easily introduces 0.1% uncertainty.

Also, if that peak is not CsD₂, then why isn’t there a second peak next to it which is the CsD₂??
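To put rough numbers on the resolution argument, here is a small calculation using standard tabulated isotope masses (the masses are my addition, not taken from the paper). It suggests that a 0.02% gap at mass 137 is only about 0.03 u, which would call for a mass resolving power of about 5000, and that a 0.1% calibration error is about five times larger than the claimed gap.

```python
# Monoisotopic masses in unified atomic mass units (u), from standard tables.
CS133 = 132.905451961
DEUTERIUM = 2.01410177812

cs_d = CS133 + DEUTERIUM        # the peak the authors labeled "CsD"
cs_d2 = CS133 + 2 * DEUTERIUM   # the obvious candidate for the 137 peak

# Claimed separation between CsD2 and the measured peak: 0.02% of the mass.
claimed_fraction = 0.0002
claimed_gap = claimed_fraction * cs_d2
needed_resolving_power = 1 / claimed_fraction   # m / delta-m

# A 0.1% error in the mass-axis calibration (e.g. from a wide reference peak).
calibration_error = 0.001 * cs_d2

print(f"CsD  = {cs_d:.3f} u, CsD2 = {cs_d2:.3f} u")
print(f"claimed gap = {claimed_gap:.3f} u (needs m/dm ~ {needed_resolving_power:.0f})")
print(f"0.1% calibration error = {calibration_error:.3f} u")
```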

Maybe the most famous cold fusion SIMS molecular ion error is this paper by Iwamura, in which Fig. 9 shows a SIMS peak at mass 96. The authors attribute it to molybdenum and make much of the fact that it has a different isotopic ratio than normal molybdenum. But then Takahashi et al. tried to reproduce it, and found that the peak was just a normal contaminant, triatomic sulfur, 3×32=96 (ref: Rothwell via Shanahan). Another group found a different suspect: ⁵⁶Fe+⁴⁰Ca. The possibilities are endless!
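The combinatorics of the molecular ion problem are easy to see with a toy enumeration. The sketch below uses nominal (integer) masses for a small, hand-picked set of common isotopes (the list is an illustrative assumption, far from exhaustive) and lists every combination of up to three atoms that lands on mass 96. Real peak assignment would also use exact masses and isotope patterns, but even this crude version turns up several candidates besides ⁹⁶Mo, including both suspects named above.

```python
from itertools import combinations_with_replacement

# Nominal (integer) masses of a few common isotopes. Illustrative subset only;
# a real search would include many more species (hydrides, oxides, etc.).
ISOTOPES = {
    "O16": 16, "Na23": 23, "Mg24": 24, "Al27": 27, "Si28": 28,
    "S32": 32, "K39": 39, "Ca40": 40, "Fe56": 56, "Ni58": 58,
    "Cu63": 63, "Mo96": 96,
}


def candidates(target_mass, max_atoms=3):
    """Every combination of up to max_atoms isotopes whose nominal masses
    add up to target_mass."""
    hits = []
    for n in range(1, max_atoms + 1):
        for combo in combinations_with_replacement(ISOTOPES, n):
            if sum(ISOTOPES[name] for name in combo) == target_mass:
                hits.append(combo)
    return hits


hits = candidates(96)
for combo in hits:
    print(" + ".join(combo))
```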

Other analytical techniques have the same problem with ambiguous peaks. Shanahan has dug up lots of good examples—EDX peaks identified as tin but which can also be sodium and potassium (known contaminants), or XPS peaks identified as praseodymium but which can also be copper (a known contaminant), and on and on. This latter example has an interesting twist ending: during this failed replication, it emerged that the apparent praseodymium was, at least in some cases, actually praseodymium after all!! But it was contamination! Traces of praseodymium were found all over the lab. It seems they may have bought some praseodymium as a reference to check their measurements against. Talk about a self-fulfilling prophecy!

As above, in some cases, the authors neglect these alternate peak interpretations altogether, and in other cases, the authors pay lip service to them, then dismiss them for far-fetched reasons, such as comparing to “controls” that are not really controls (cf. the H vs D discussion in section 2.2).

4.1.3 Disappearing peaks

One aspect of some transmutation studies like this one by Iwamura et al. is that one peak disappears while another appears—which, I admit, feels like what one should expect from a real transmutation. According to the authors, cesium gradually disappears while praseodymium appears … and in this one, also barium disappears while samarium appears! In both cases the atomic number increases by an even number: by 4 from cesium (55) to praseodymium (59), and by 6 from barium (56) to samarium (62). (Hey, if it’s just misidentified peaks and contaminants, how do we account for such a remarkable numerological coincidence? Good question! See Section 5.1 below.)

But the question for this section is: Why does one peak disappear at the same time as another peak appears? There’s a nice answer to that! In XPS, SIMS, and other surface-sensitive measurements, only the outer few layers of atoms can be detected. If contaminants are getting plated on top of the original surface—and they surely are—then the original surface atoms’ signals will disappear, and moreover they’ll disappear at the same rate and same time as the new atoms’ signals appear!
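The simplest textbook model of a uniform overlayer makes this quantitative. In this sketch (assumptions: normal emission, a contaminant film growing at a constant rate, a hypothetical inelastic mean free path of 2 nm, and a hypothetical growth rate of 0.1 nm per hour), the substrate signal is attenuated as exp(-d/λ) while the overlayer signal grows as 1 - exp(-d/λ). Normalized to their own maxima, the two always sum to one, so the fade-out and fade-in are locked together by construction.

```python
import math

IMFP_NM = 2.0          # assumed inelastic mean free path of the electrons (nm)
GROWTH_NM_PER_H = 0.1  # hypothetical contaminant deposition rate (nm/hour)


def normalized_signals(hours, imfp=IMFP_NM, rate=GROWTH_NM_PER_H):
    """Uniform-overlayer model at normal emission. Returns (substrate,
    overlayer) signals, each as a fraction of its own maximum value."""
    thickness = rate * hours
    attenuation = math.exp(-thickness / imfp)
    substrate = attenuation          # original surface atoms fade out
    overlayer = 1.0 - attenuation    # deposited contaminant fades in
    return substrate, overlayer


for h in (0, 10, 20, 40, 80):
    s, o = normalized_signals(h)
    print(f"t = {h:3d} h  original surface {s:.2f}  contaminant {o:.2f}")
```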

4.2 Helium

The widespread belief in cold fusion circles is that the primary net reaction in deuterium-palladium cold fusion experiments is the conversion of deuterium atoms to helium-4 plus heat. (What’s the exact reaction pathway? No one knows. But it could be worse: in nickel-hydrogen cold fusion experiments, the community cannot even agree on the net reaction, let alone the exact reaction pathway!)

So anyway, that immediately suggests running deuterium-palladium cold fusion experiments and measuring helium-4. And this has indeed been attempted.

Unfortunately, it’s not as simple as it sounds. Three experimental challenges:

- Helium-4 is present in the atmosphere at 5ppm, and within scientific laboratory buildings at potentially much higher levels. (Liquid helium is used for cryogenic measurements, and is usually allowed to boil off into the open air, from which it can spread through a building via the ventilation system.)

- Leak-proofing against helium-4 is hard, even harder than against most other gases—those little mischievous atoms pass through all sorts of materials. In other words, it is possible for an apparatus to be leak-tight against nitrogen and argon and so on, but not leak-tight against helium-4. This makes it awfully hard to figure out whether the helium-4 you measure is leaking in from the atmosphere.

- The expected amount of helium-4 created by these experiments is very low—1 watt of excess heat would theoretically make just 0.8μL of helium over the course of an entire day. So even an excruciatingly slow leak can drown out the measurement.
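The 0.8 μL figure is easy to reproduce. This sketch assumes the net reaction releases about 23.85 MeV per helium-4 atom (the “24 MeV” figure) and uses the ideal-gas molar volume at 0 °C and 1 atm; it also converts the result into the volume of ordinary air carrying the same helium, using the 5 ppm figure above.

```python
# Helium-4 produced per watt-day of excess heat, assuming ~23.85 MeV per
# helium-4 atom and ideal-gas molar volume at STP.
EV_TO_J = 1.602176634e-19
AVOGADRO = 6.02214076e23
MOLAR_VOLUME_STP_L = 22.414   # L/mol at 0 degC, 1 atm
Q_MEV = 23.85                 # energy released per helium-4 atom (MeV)
AIR_HE_FRACTION = 5e-6        # ~5 ppm helium in air


def helium_microliters(power_w, seconds):
    """Volume of helium-4 (uL at STP) implied by a given excess heat."""
    energy_j = power_w * seconds
    atoms = energy_j / (Q_MEV * 1e6 * EV_TO_J)
    return atoms / AVOGADRO * MOLAR_VOLUME_STP_L * 1e6  # litres -> microlitres


he_ul = helium_microliters(1.0, 24 * 3600)
# Volume of ordinary air that carries the same amount of helium.
air_ml = he_ul / AIR_HE_FRACTION / 1000  # microlitres -> millilitres
print(f"{he_ul:.2f} uL of He per watt-day; same He as {air_ml:.0f} mL of air")
```

With these inputs, a full watt-day of excess heat corresponds to roughly 0.84 μL of helium, the same amount carried by roughly 170 mL of room air.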

So, are cold fusion researchers up to the challenge? They say they are. But do we believe them?

One reason not to is the famous 2003 study by W. Brian Clarke et al. They took three samples from SRI (a well-respected institution in cold fusion circles) and did state-of-the-art gas detection. What did they find? You guessed it: Air leakage, air leakage, and air leakage respectively!

Was that Clarke’s fault or SRI’s fault? If the latter, did they clean up their act? As far as I can tell, nobody knows, and this experiment was never attempted again.

False claims of helium detection related to air leakage have a long history, dating back to the Paneth and Peters retraction (1926). They thought they had created helium from deuterium, but it turned out that the helium was a stowaway, originating in the atmosphere and hiding within the glass walls of the container they used. This didn’t look like an air leak, because only helium, not argon or other air constituents, would hide in the glass like that. And their “control experiments” couldn’t find that helium either, because the helium would only leave the glass walls in the presence of both heat and hydrogen! Very tricky—I would have been fooled too! I love this story; it beautifully illustrates many of the themes we’ve seen on this page.

4.2.1 Heat-helium correlation

One argument I’ve heard, mainly championed by cold fusion journalist Abd Lomax, goes something like this:

OK maybe the heat measurements could be erroneous, and OK maybe the helium measurements could be erroneous, but since experiments find that heat and helium are correlated (the cells with one also have the other), and that they have the ratio of “24 MeV per atom of Helium-4” predicted by nuclear physics, then they must both be real!

The right place to start here is by questioning the premise. Do experiments really find heat and helium to be correlated, and at the ratio 24 MeV? According to cold fusion skeptic Joshua Cude, here, it’s at least open to question:

Miles claimed a very weak correlation, in which results changed by an order of magnitude between interpretations, in which 4 control flasks also had helium, in which most of the controls were performed after the fact in a different experiment, when maybe their exclusion of helium had improved, and in which one experiment that produced substantial excess heat but no helium was simply ignored. And if the quantitative correlation with the amount of heat was weak when the glass flasks were used, it was essentially absent when the experiment improved and metal flasks were used. Tightening up the experiment made the results looser, not tighter.

Miles results were challenged in the refereed literature, and after that no quantitative correlation met the modest standard of peer review.

Indeed, 4 years after Miles, Gozzi published a careful helium study under peer review and admitted that “the low levels of 4He do not give the necessary confidence to state definitely that we are dealing with the fusion of deuterons to give 4He”. After that his experimental activity in the field seems to have stopped.

And of the other (mainly unpublished) groups Storms cites that reproduced Miles, two groups (Chien and Botta) did not measure heat, and so could not have observed a correlation; two groups (Aoki and Takahashi) report results that suggest an anti-correlation; another group (Luch) has continued experiments until recently, but stopped reporting helium; two groups (Arata and DeNinno) do not claim a quantitative correlation, but in one case (Arata) the helium levels seem orders of magnitude too low to account for the heat, although extracting information from his papers is difficult, and in the other (DeNinno) the helium level is an order of magnitude too high.

The only results since Miles that Storms has deemed worthwhile (i.e. cherry-picked) to calculate energy correlation come from McKubre’s unrefereed experiments, which include experiments described in the 1998 EPRI report, where McKubre himself is initially negative about, saying “it has not been possible to address directly the issue of heat-commensurable nuclear product generation”. His confidence in the results seems to have grown since then, but Krivit claims to show (with considerable evidence) that he manipulated the data to support his thesis.

This is the sort of evidence you regard as confirmation. This is what passes for conclusive in the field of cold fusion. This is good enough that no measurements of helium-heat in the last decade entered Storms’ calculations.

(The allegation that McKubre manipulated data to get the 24MeV number is at this link.) Now, I’m still working my way through all these papers to confirm that this scathing little literature review here is correct. But even if it’s wrong—even if heat and helium are correlated—so what? This comes back to a point raised above, the difference between noise and systematic error. Sure, two independent random noise sources will not be correlated. But it’s entirely possible for two systematic errors to be correlated!!

For example: At-The-Electrode-Recombination (section 3.3 above) could cause both heat (as discussed above) and helium (by air in-leakage caused by little vibrations / shock waves [and yes vibrations have been measured], and/or the stresses and strains associated with a change in heat sources).
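A toy simulation makes the point concrete. Everything here is hypothetical: each simulated cell has a hidden condition (standing in for something like the degree of mechanical disturbance), and both the “excess heat” and the “helium” are pure artifacts driven by that same condition plus independent noise. No nuclear reaction is modeled at all, yet the two artifacts come out strongly correlated, while two independent noise sources do not.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5


rng = random.Random(0)
N_CELLS = 500

# Hypothetical hidden condition of each cell; no nuclear effect exists here.
hidden = [rng.gauss(0, 1) for _ in range(N_CELLS)]

# Both "signals" are artifacts of the same hidden condition plus independent noise.
apparent_heat = [h + rng.gauss(0, 0.3) for h in hidden]
apparent_helium = [h + rng.gauss(0, 0.3) for h in hidden]

# For comparison: two purely independent noise sources.
noise_a = [rng.gauss(0, 1) for _ in range(N_CELLS)]
noise_b = [rng.gauss(0, 1) for _ in range(N_CELLS)]

print(f"shared-cause artifacts: r = {pearson(apparent_heat, apparent_helium):.2f}")
print(f"independent noise:      r = {pearson(noise_a, noise_b):.2f}")
```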

The 24MeV number seems to come first and foremost from a large helping of publication bias (Storms here mentions half a dozen studies that have measured both heat and helium without reporting the ratio). This is then further refined by cherry-picking among the published studies, emphasizing the ones that are close to 24MeV, while ignoring the ones off by a factor of 30, for example. A further step beyond publication bias and cherry-picking is experimenter bias (cf. Section 5.2 below). Experimenter bias doesn’t have to be as extreme as the alleged outright data manipulation. It can be utterly innocent. It can happen to careful, honest, and ethical researchers. It can even happen to you. You start doing measurements. You notice that you’re getting a ratio higher than 24MeV, and therefore you study your apparatus looking for places where helium might be stuck and not getting to the helium detector, and you study your data processing procedure looking for ways that you might have accidentally left out part of the helium signal. Or conversely, you notice that you’re getting a ratio lower than 24MeV, and therefore you study your apparatus looking for places where there might be helium in-leakage or heat losses, etc. If you do this carefully and honestly, then every step of this process is legitimate experiment debugging, and no one would call it “unethical data manipulation”. But the effect is the same: to steer your measurement result towards the expected 24MeV, regardless of objective reality.
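Here is a toy model of that steering process, with every number hypothetical. Each simulated experiment’s heat/helium ratio is a product of a few random systematic factors with no connection to 24 MeV. The “careful” experimenter keeps finding and fixing one problem at a time (re-drawing one factor) until the result looks plausible, then stops. Each individual fix is legitimate, but the stopping rule alone concentrates the reported values around the target.

```python
import math
import random

TARGET = 24.0      # expected MeV per helium atom
TOLERANCE = 0.25   # result "looks right" once within +/-25% of the target
MAX_FIXES = 50     # hypothetical patience of the experimenter
N_FACTORS = 4      # hypothetical independent systematic factors


def ratio(factors, scale=10.0):
    # Arbitrary hypothetical scale times the product of systematic factors.
    return scale * math.prod(factors)


def looks_right(value):
    return abs(value / TARGET - 1.0) <= TOLERANCE


def run_experiment(rng, debug):
    factors = [rng.lognormvariate(0.0, 0.7) for _ in range(N_FACTORS)]
    if debug:
        for _ in range(MAX_FIXES):
            if looks_right(ratio(factors)):
                break  # matches expectations: stop checking
            # "Find a problem and fix it": one systematic factor changes.
            i = rng.randrange(N_FACTORS)
            factors[i] = rng.lognormvariate(0.0, 0.7)
    return ratio(factors)


def fraction_near_target(values):
    return sum(looks_right(v) for v in values) / len(values)


rng = random.Random(1)
unsteered = [run_experiment(rng, debug=False) for _ in range(2000)]
steered = [run_experiment(rng, debug=True) for _ in range(2000)]
print(f"no extra debugging:    {fraction_near_target(unsteered):.0%} near 24 MeV")
print(f"debug until plausible: {fraction_near_target(steered):.0%} near 24 MeV")
```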

4.3 Miscellany

4.3.1 CR-39 radiation detectors

A series of experiments that were widely publicized starting around 2007 involved CR-39 radiation detectors, which indicated (allegedly) fast neutrons coming out of cold fusion experiments. When neutrons or other particles go through CR-39, they induce chemical changes that, upon etching the CR-39, leave pits visible with a microscope. Unfortunately, lots of other things create pits in CR-39 too! The discussion in Shanahan 2010 is nice and readable and comprehensive, and I won’t try to summarize it here. (See also the Earth Tech CR-39 experiments following up their failed replication of these results.)

The 2010 reply to Shanahan dismisses these hypotheses using the tactic we’ve seen again and again: purporting to rule out non-nuclear explanations via Control-Experiments-That-Are-Not-Really-Control-Experiments.

I won’t ruin the fun this time: I’ll let readers try this one at home. Take a look at the so-called “exhaustive series of control experiments that showed that the tracks were not due to radioactive contamination of the cell components nor were they due to mechanical or chemical damage”. It’s Table 1 here. Now sit down with a pen and paper, and try to think of a radioactive contamination, chemical, or mechanical damage mechanism that none of these “control experiments” has ruled out.

(Hint: There is more than one right answer!!)

4.3.2 Everything else

There have been so many different reports of so many different things—X-rays, gamma-rays, helium-3, tritium, you name it—and detected in so many different ways, that I can’t hope to go through them all in detail. I’ll just say that the general pattern, as far as I can tell, is unreplicated results barely above the limit of detectability, or detected in an ambiguous way. For example, X-rays can be detected by the way they fog up film, but according to Shanahan, heat can fog up film too, as can chemical exposure (indirectly via hypering). As for tritium, one method of tritium detection gets false positives from dissolved palladium and suspended nanoparticles, while another method of tritium detection gets false positives from water. You get the idea.

5. Meta-level considerations

As mentioned above, a lot of the debate surrounding cold fusion experiments is not about the nitty-gritty details of particular experimental techniques; it comes instead from big-picture, qualitative thinking about the situation as a whole. I’ll address a few aspects that I think are important to thinking clearly about this topic.

5.1 What to expect when you read the debunking of a field devoted to a non-existent phenomenon

I copy a nice discussion from here.

Suppose you’re talking to one of those ancient-Atlantean secrets-of-the-Pyramids people. They give you various pieces of evidence for their latest crazy theory, such as (and all of these are true):

1. The latitude of the Great Pyramid matches the speed of light in a vacuum to five decimal places.

2. Famous prophet Edgar Cayce, who predicted a lot of stuff with uncanny accuracy, said he had seen ancient Atlanteans building the Pyramid in a vision.

3. There are hieroglyphs near the pyramid that look a lot like pictures of helicopters.

4. In his dialogue Critias, Plato relayed a tradition of secret knowledge describing a 9,000-year-old Atlantean civilization.

5. The Egyptian pyramids look a lot like the Mesoamerican pyramids, and the Mesoamerican name for the ancient home of civilization is “Aztlan”

6. There’s an underwater road in the Caribbean, whose discovery Edgar Cayce predicted, and which he said was built by Atlantis

7. There are underwater pyramids near the island of Yonaguni.

8. The Sphinx has apparent signs of water erosion, which would mean it has to be more than 10,000 years old.

She asks you, the reasonable and well-educated supporter of the archaeological consensus, to explain these facts. After looking through the literature, you come up with the following:

1. This is just a weird coincidence.

2. Prophecies have so many degrees of freedom that anyone who gets even a little lucky can sound “uncannily accurate”, and this is probably just what happened with Cayce, so who cares what he thinks?

3. Lots of things look like helicopters, so whatever.

4. Plato was probably lying, or maybe speaking in metaphors.

5. There are only so many ways to build big stone things, and “pyramid” is a natural form. The “Atlantis/Atzlan” thing is probably a coincidence.

6. Those are probably just rocks in the shape of a road, and Edgar Cayce just got lucky.

7. Those are probably just rocks in the shape of pyramids. But if they do turn out to be real, that area was submerged pretty recently under the consensus understanding of geology, so they might also just be pyramids built by a perfectly normal non-Atlantean civilization.

8. We still don’t understand everything about erosion, and there could be some reason why an object less than 10,000 years old could have erosion patterns typical of older objects.

I want you to read those last eight points from the view of an Atlantis believer, and realize that they sound really weaselly. They’re all “Yeah, but that’s probably a coincidence”, and “Look, we don’t know exactly why this thing happened, but it’s probably not Atlantis, so shut up.”

This is the natural pattern you get when challenging a false theory. The theory was built out of random noise and ad hoc misinterpretations, so the refutation will have to be “every one of your multiple superficially plausible points is random noise, or else it’s a misinterpretation for a different reason”.

If you believe in Atlantis, then each of the seven facts being true provides “context” in which to interpret the last one. Plato said there was an Atlantis that sunk underneath the sea, so of course we should explain the mysterious undersea ruins in that context. The logic is flawless, it’s just that you’re wrong about everything.

So this is the “null hypothesis” of what to expect if there’s no such thing as cold fusion. By now there are probably ≈1000 person-years of experimental data created by cold fusion researchers. In such a huge mountain of data, there are bound to be lots of “random noise and ad hoc misinterpretations” that happen to line up remarkably with researchers’ prior expectations about cold fusion. The question is not “Are there results that seem to provide evidence for cold fusion?”, but rather “Is there much more evidence for cold fusion than could plausibly be filtered out of 1000 person-years of random noise, misinterpretations, experimental errors, bias, occasional fraud, gross incompetence, weird equipment malfunctions, etc.?”
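A crude sketch of that filter, counting only its simplest ingredient (random noise): assume, purely hypothetically, 10,000 independent measurements of an effect that is exactly zero, and keep only the ones more than 3σ in the direction cold fusion predicts. Systematic errors, bias, and the rest of the list only add to the pile, so this understates the problem.

```python
import math
import random

N_RESULTS = 10_000   # hypothetical number of independent measurements
THRESHOLD = 3.0      # a "striking" result: more than 3 sigma above zero

rng = random.Random(42)
# Every measurement is pure noise: the true effect is exactly zero.
z_scores = [rng.gauss(0.0, 1.0) for _ in range(N_RESULTS)]
# Only excursions in the predicted direction get noticed and written up.
striking = [z for z in z_scores if z > THRESHOLD]

# Expected count from the one-sided normal tail, P(Z > 3) ~ 0.00135.
expected = N_RESULTS * 0.5 * math.erfc(THRESHOLD / math.sqrt(2))
print(f"{len(striking)} striking results (about {expected:.1f} expected) from pure noise")
```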

5.2 So…do you think the cold fusion researchers are “lying, or lunatics, or horribly incompetent?”

[source of quote] No no no! None of the above!!

Start with fraud and lying. There is undoubtedly some falsified data sprinkled in the cold fusion literature, just as in every other field, and we are wise to keep that in mind. For example, if we can successfully explain away 95% of cold fusion papers as being experimental errors, then we should consider very carefully whether the remaining 5% of papers might just be fabricated data—which again is something that happens in every field and is rarely caught.

That said, I do NOT think fraud is a primary explanation underlying the cold fusion technical literature. Why do I think that? Among other things, if cold fusion experimentalists were willing to make stuff up, then they would have made up better and more convincing data than what we actually have!!

(I’m just talking about the technical literature here, not the business side of cold fusion, where high-profile fraud accusations are frequently in the news.)

As for incompetence, yes, if mainstream science is correct that cold fusion is the study of a non-existent phenomenon, then the obvious implication is that the people studying it are inadequate in a trait, let’s call it “self-critical fastidiousness”, comprising the ability and desire to apply rigorous analysis to one’s work, treating one’s knowledge and beliefs as uncertain, and aggressively searching for flaws in them to the point of paranoia. Lacking self-critical fastidiousness does not make someone a generally incompetent person, and certainly doesn’t make them a moron. Yes, there are morons in the cold fusion community, just like every other community, but I would be very uncomfortable calling Martin Fleischmann a moron, or a few other people whose papers I’ve read. Again, the question is not whether the field is full of morons, but whether it’s empty of scientists with simultaneously high levels of intelligence, subject-matter expertise, and self-critical fastidiousness, which would happen if people meeting that description have all exited the field long ago, or not entered in the first place.

And how about Martin Fleischmann anyway? Does he deserve his reputation as a world-renowned electrochemist? Absolutely. Did he get that reputation by being overflowing with self-critical fastidiousness, or for other things like intelligence, subject-matter-expertise, having a good nose for where to find important new phenomena, being a good manager of students and postdocs, etc. etc.? Now, I never met the guy, but there seems to be some evidence that he got his reputation for those “other things”, not for heroic levels of self-critical fastidiousness. For example, Martin Fleischmann’s celebrated 1974 paper discovering Surface-Enhanced Raman Spectroscopy attributes the effect to the increased electrode surface area, which supports more adsorbed molecules and hence a higher signal. They expressed no doubts or uncertainties when they said this, but they hadn’t actually checked that it was true, or else they would have quickly discovered that it wasn’t. No matter: Fleischmann is celebrated for discovering the phenomenon, and few remember the error. Later on, the famous original Fleischmann-Pons-Hawkins cold fusion paper claimed not only excess heat (a claim that the cold fusion community still believes) but also the detection of neutrons (a claim that was quickly and universally accepted as erroneous).

5.2.1 What does experimenter bias look like, in the hard sciences?

We’ve all heard of Clever Hans in psychology, and we’ve all heard of the placebo effect in medicine, but not everyone has a clear, concrete picture of exactly how experimenter bias can lead people astray in the hard sciences. So here are a couple of examples.

Imagine you’re an experimentalist, and your apparatus spits out a number on a display, and you expect it to be around 50. You set up the experiment, and the display says “0.0002”. That’s not right! So you look for problems in the experimental setup—leaks, bad electrical connections, malfunctioning test equipment, whatever—and you find one! And you fix it! And now the display says “46”, a sensible number close to what you expected. Everything about the apparatus now appears to be working. Does that mean there are no other major problems? No, maybe your expectations were wrong! Maybe the display would be “820” if you really fixed all the problems in the apparatus. But once the experiment seems to be working properly, are you going to keep checking and double-checking every last solder joint? Probably not; at this point there’s a very strong temptation to just take the measurements and publish the paper.

Another example: I believe theory T (e.g. cold fusion), and theory T makes prediction P. I take data looking for P, and I do actually see P! There’s a strong temptation to stop at this point, publish P, and say that this offers strong evidence of T. This is of course fallacious; have I considered that maybe we should expect to find P even if T is false? This is classic confirmation bias—it’s the Wason selection task. We’ve seen this over and over in the sections above: Cold fusion predicts something, and that thing is in fact observed, but conventional chemistry can explain the same observation.

Thanks to dynamics like these, it’s common for experiments to give erroneous results, or erroneously-interpreted results, that just so happen to match the experimenter’s prior expectation. This happens by default unless the experimenter is overflowing with self-critical fastidiousness, in addition to intelligence and subject-matter expertise.

(If you haven’t read Langmuir’s famous talk on pathological science, you should! It’s a nice take on these exact issues.)

5.3 Reproducibility: Is it still a problem in cold fusion, and if so, what should we infer?

A 100% reproducible effect is one where any person with the skill and resources can follow a written procedure, and all these people get the same results. An effect with 100% yield is different—that means a single person can follow the same procedure multiple times, and all these samples show the same results.

Both low yield and low reproducibility are problems that have plagued cold fusion since it began. Yield of Fleischmann-Pons-style electrochemical cold fusion cells is typically 20%, and of course it was reproducibility problems that destroyed the field’s reputation in 1989-1991.
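A little arithmetic shows why low yield and low reproducibility are different problems. If yield were the only issue, and each cell independently showed the effect 20% of the time (the independence is an idealizing assumption), a replicating lab could make a total null result unlikely simply by running more cells:

```python
def p_all_fail(yield_fraction, n_cells):
    """Probability that every one of n_cells independent cells fails to show
    the effect, if each shows it with probability yield_fraction."""
    return (1.0 - yield_fraction) ** n_cells


for n in (1, 5, 10, 20):
    print(f"{n:2d} cells at 20% yield: P(none show the effect) = {p_all_fail(0.2, n):.3f}")
```

Under this idealized assumption, a lab running 20 cells would come up completely empty only about 1% of the time.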

Advocates claim that progress has been made on yield, at least in certain types of cold fusion experiments. But reproducibility is still a huge problem. I quote Michael McKubre, a leading figure in cold fusion, in June 2018: “To my knowledge there is no written replicable procedure or protocol.” (ref) The track record on reproducibility is terrible—8 failed replications by Earth Tech, NRL failing to replicate MHI, failed DTRA effort to replicate Szpak, several failed replications by a Google-funded consortium, and on and on.

What should we infer from the persistent reproducibility problems? Cold fusion advocates—if they acknowledge that there’s a problem here at all—say two things.

- They say that reproducibility problems stem largely from the cathode material, and that—since no two materials are the same down to the last atom—this is natural and expected.

- They say “The most reproducible effect by its very nature is systematic error. Irreproducibility of results far from being a proof of non-existence argues more the contrary, and simply indicates that not all conditions critical to the effect are being adequately controlled.” (McKubre).

Now, if the materials were to blame, then the first thing cold fusion researchers would do is to systematically work on the materials science and engineering required to diagnose and solve the problem—what cathode annealing schedule, crystallinity, composition, etc. are correlated with the excess heat? In fact, they’ve been doing this exact thing for years! Way back in the early 1990s, for example, Bose “spent unlimited resources to have custom lots of palladium and palladium/silver manufactured for them according to successful researchers specifications; had single-crystal palladium cathodes custom grown; palladium grain size ranged from a few microns to single crystal…” (ref). This research effort has led nowhere; cold fusion researchers talk about “magic materials” to this day.

As for point 2 above, I couldn’t disagree more! Remember the most important fact about scientific reproduction: Nobody tries to reproduce every aspect of an experiment. Nobody tries to reproduce the color of the voltmeter! Nobody tries to reproduce what the technician ate for lunch! Instead, reproduction efforts are inevitably focused only on the aspects of the experiment that the researchers believe to be pertinent to the effect being measured. Thus, reproducibility problems are a sign that the experimenters are fundamentally misunderstanding the nature of the effect that they’re looking at, which leads them to document the wrong parts of the apparatus, the wrong aspects of the procedure, etc.

For example, the Shanahan ATER (At-The-Electrode Recombination, Section 3.3 above) hypothesis puts a lot of emphasis on whether and how O₂ bubbles flow from the anode to the cathode, which in turn depend very sensitively on the cell geometry, heat convection, stirring procedures, and so on. However, according to the nuclear hypothesis, these things are all totally irrelevant. Naturally, a researcher who believes the nuclear hypothesis is unlikely to put rigorous effort into exhaustively documenting these aspects of the setup. And thus, if ATER is what’s really happening, the next lab to try following that documented procedure may well fail.

I’ve been talking about reproducibility in this section, but pretty much the same comments apply to cold fusion’s very limited progress on yield, controllability (ability to turn the effect on and off reliably), and scale-up (ability to make the effect 10× or 100× stronger), again despite 30 years of effort on all these fronts.

6. Conclusion

Suppose there’s no such thing as cold fusion. As in section 5.1, imagine taking 1000 person-years of random noise, misinterpretations, experimental errors, bias, occasional fraud, gross incompetence, weird equipment malfunctions, etc., and then passing it through a filter that amplifies results to the extent that they conform to expectations about cold fusion. Of course a lot of stuff will get through the filter, and some of that stuff, by itself, would be pretty convincing. A cesium peak disappeared at the same time as a praseodymium peak appeared! And, a barium peak disappeared at the same time as a samarium peak appeared! Wow! Aren’t things like these such remarkable coincidences that they can’t really be coincidences at all? Taken by themselves, they seem that way. But in the context of this filtering process, no, they aren’t!

Instead, I urge readers to take a step back and look at the bigger picture.

We’re now at 30 years of cold fusion research.

The magnitude of the effect, both relative and absolute, is pretty much the same as it was 30 years ago, and still insufficient for the apparatus to power itself, let alone power anything else.

Cold fusion advocates still make much of isolated, un-reproduced results from decades ago, like the undocumented Mizuno heat-after-death demonstration, or the Fleischmann-Pons explosion that happened when the experts were not in the lab watching. Shouldn’t the examples be getting better and better?

Reproducibility, yield, controllability, and scale-up are all more-or-less just as much of a problem today as they were 30 years ago, which all suggest that the researchers are measuring a fundamentally different kind of effect than they think (Section 5.3)—consistent with researchers who think they’re studying a nuclear effect, but are actually studying various chemical phenomena and systematic errors.

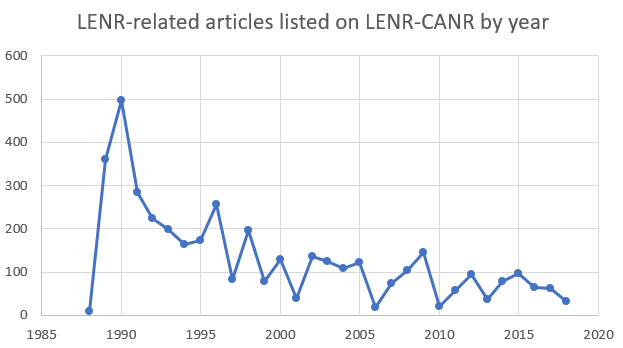

[UPDATE: Please ignore the rightmost point of the graph above; it doesn’t include the full year.] The amount of cold fusion research is shrinking towards zero. The US Navy, DARPA, NASA, and SRI are among the many fine institutions that used to support cold fusion research but no longer do. Look at the website of any of the top 100 physics departments or top 100 chemistry departments in the world. How many advertise their cold fusion research programs? Cold fusion conferences and journals are dominated by the same people year after year, with few new faces.

Cold fusion advocates say: “If only people were made aware of cold fusion research, they would be convinced!” But I say: Don’t cold fusion advocates have friends? Colleagues? Why isn’t it spreading by word of mouth? The answer is, it isn’t spreading because of countless little stories you’ll never hear, where an individual subject-matter-expert does look at the evidence, doesn’t believe it, and chooses not to spread those ideas further.

Cold fusion advocates say they don’t have the money or institutional support to make progress. The premise is questionable: I’ve heard that $500M has been spent on cold fusion, funded by both governments and companies. But we can still ask: Why isn’t there more money and more institutional support? Grants come from grant committees made of scientists, brought together by grant-giving institutions led by scientists. So we’re back to the previous paragraph. If the case for cold fusion were convincing to subject-matter experts, then grassroots support for cold fusion would spread among scientists, growing exponentially, and it would soon bubble up to successful grants and other forms of institutional support, just as it has for every successful new scientific idea.

Cold fusion advocates say that the nuclear physics community has a conflict of interest, jealously guarding their plasma fusion research funding. First of all, it’s absurd to think that a typical nuclear physicist would benefit more from defending their tiny slice of the plasma fusion research budget than from being on the ground floor of a revolution that could upend the trillion-dollar-a-year global energy market. Just look at the speed and quantity of money that poured into cold fusion research immediately following its announcement 30 years ago. Second of all, other stakeholders, like the community of mainstream hydrogen-metal chemists, have nothing to lose and everything to gain from cold fusion, but they remain just as skeptical as the nuclear physicists. See, for example, that time Kirk Shanahan put up a poster about cold fusion at a Hydrogen-Metal Systems Gordon Research Conference.

Shanahan’s insight about CCS / UICC is now 17 years old. The failure of the cold fusion research community to correctly and convincingly incorporate that insight into their calorimetry best practices is maybe the most glaringly obvious of these many signs of dysfunction. I think a lot of the dysfunction comes from a defensiveness that makes cold fusion advocates loath to criticize themselves or each other, or to criticize even the most ridiculous claims from within the community. It also comes from the community’s widespread culture of explaining away failed replications—“oh well, it must be mysterious uncontrolled properties of the raw materials!”—which undermines the community’s ability to acknowledge and learn from mistakes, uncover fraud, and all those other essential parts of the scientific enterprise.
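For readers jumping in here, a minimal sketch of why a calibration constant shift (CCS) matters may help. It assumes a deliberately simplified linear calorimeter in which inferred output power is a calibration constant k (in W/K) times the measured temperature rise. Real cells and Shanahan’s actual analysis are more involved, and the function name and numbers below are hypothetical. With zero real excess heat, a constant that drifts down by just 1% after calibration shows up as apparent excess power of roughly 1% of the input.

```python
def apparent_excess_heat(p_in_w, k_cal_w_per_k, k_true_w_per_k):
    """Apparent excess power in a toy linear calorimeter with ZERO real excess heat.

    Assumed model (hypothetical, for illustration only):
        true output power  = k_true * delta_t
        inferred output    = k_cal  * delta_t
    where k_cal is the constant found during calibration and k_true is the
    constant that actually applies now (e.g. after the heat source moved).
    """
    # With no real excess heat, all input power leaves as heat, so the
    # actual temperature rise is set by the *current* constant k_true.
    delta_t_k = p_in_w / k_true_w_per_k
    # The experimenter converts delta_t back to power with the *old* constant.
    p_out_inferred_w = k_cal_w_per_k * delta_t_k
    return p_out_inferred_w - p_in_w


if __name__ == "__main__":
    p_in = 10.0   # W, hypothetical input power
    k_cal = 0.5   # W/K, hypothetical calibration constant
    for shift in (0.0, 0.01, 0.02, 0.03):
        k_true = k_cal * (1.0 - shift)  # constant drifts downward by `shift`
        excess = apparent_excess_heat(p_in, k_cal, k_true)
        print(f"{shift:.0%} CC shift -> apparent excess {excess * 1000:.0f} mW")
```

A drift in the other direction produces apparent negative excess heat. Either way, the size of any possible shift has to be bounded quantitatively rather than assumed to be zero.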

So at the end of the day, leaving aside the theory and just looking carefully at the experimental data by itself, I see no reason to believe that there are nuclear effects going on in any cold fusion experiment. All signs point to just another pathological science, albeit one with unusual longevity! And speaking of which, once again, Happy Pearl Anniversary To Cold Fusion / LENR!

Your big problem is that you’re “uncomfortable calling Martin Fleischmann a moron”. If [Steve: SNIP, I deleted most of this comment. Please provide relevant information or cogent arguments, not just attitude / posturing.]

People have been using calorimeters since at least 1845.

https://en.wikipedia.org/wiki/Mechanical_equivalent_of_heat

Shall we redo the last 175 years of calorimetry work because of this “new” “discovery” of CCS? Shall we declare that calorimetry measurements don’t count unless they’re backed up by a 500-page engineering report?

…Or a better idea: let’s all agree that CMNS pseudoskeptics like you are being insane and hypocritical.

Dear LURKER, this is a reasonable question.

First of all, putting error bars on calibration constants, and allowing for the calibration to be different in different zones and at different times … I don’t think that’s a radical new idea, I think it’s always been part of calorimetry best practices, when the measurement demands it!

Second of all, I know of no other application of calorimetry that demands the calorimeter performance that cold fusion experiments demand, e.g. many months of stability at the 1% level even if the heat source location or other conditions change. Why else would cold fusion researchers be pushing the state of the art of calorimetry? McKubre has a joke that he “worked hard to bring calorimetry into the 20th century, and yes I mean 20th” (or something like that). I mean, cold fusion researchers use voltmeters too, but they never talk about it, they just turn the voltmeter on and they measure the voltage and they write it down. Why don’t they treat their calorimeters like that? Because this is not a common, routine, easy calorimetry measurement!!

Third of all, re “500-page engineering report”, when I want someone to trust my measurements, I provide whatever information is required to support that. Some measurements are easy. Others really do require 500-page engineering reports. One time I wrote a 100-page report on the accuracy of a measurement…and that was really just to get it started! At the very minimum, all the calorimetry errors discussed herein have been published, and CCS / UICC in particular are known to be large enough to reverse the conclusions of at least some cold fusion calorimetry studies. So I think calorimetry experimenters are absolutely obligated to try to understand these errors, quantitatively bound how they apply to their own measurements, and document all this so that anyone can understand and double-check. And they should do this in however many pages it takes. And if they don’t do that, then I don’t believe their results, and you shouldn’t either, and they shouldn’t believe their own results either.

Easy for you to say from your armchair. Time and budget constraints are always the reality (thanks in part to people like you suppressing investment in CMNS).

If you do a measurement but don’t have time or money to rule out alternative explanations, then your measurement result is “inconclusive”, end of story.

If you set out to do an experiment, but you anticipate that the result will definitely be “inconclusive” because your budget does not allow the checking of alternative explanations … then why are you doing that experiment in the first place? It’s pointless. Maybe you should rethink your plans and find a more productive use of time and money.

For what it’s worth, I don’t accept the idea that nobody in 30 years and $500M (or whatever the number is) has had the time to do a calorimetry experiment with sufficient care to rule out all known alternative explanations and document why and how they did so. The “500-page engineering report” is just a thing you made up; I expect it would actually be much much less. Maybe 50-100 pages?

LURKER,

You are correct. Calorimetry has been around for a long time. But so has the idea that an incorrectly determined calibration constant (CC) will give you the wrong value. (In fact, it might have preceded the calorimetric work! It’s just math after all… Why do you think F&P spent so much effort determining the ‘correct’ way to derive their heat transfer coefficients (CCs)?) That’s all the CCS (Calibration Constant Shift) is. I reanalyzed Ed Storms’ data under the assumption of zero real excess heat and found the calibration constants so derived had an embedded systematic behavior (on top of the normal random variation one would expect). Why would a CC shift? Because the operating conditions of the experiment change. How could that happen? If there was a residual spatial dependence on the heat distribution, and if that distribution shifted, the CC would change, i.e. a CCS occurs. How could the steady state change? By the initiation of a new chemical process in the apparatus, such as at-the-electrode-recombination (or perhaps I should have used ‘combustion’ as Steve did, i.e. ATEC). The beauty of the ATEC explanation is that it also helps explain other anomalous features of the results.

And all of that with no challenge to calorimetry at all…

Kirk Shanahan

You forgot the most important problem regarding reproducibility.

And that is the lack of a working theory that makes predictions that can be tested.

How can we expect LENR reproducibility when the explanatory theory has not been identified? And when theoretical physicists do not dare to think outside the box and come up with bold ideas that can be tested?

Hagelstein has some interesting thoughts on theory. He is almost alone in the field of theoretical cold fusion research. Look him up on YouTube.

If you want to criticize, you should start by explaining why you believe we have reached the end of science as long as we can only explain 3% of the universe (the rest being “dark matter and dark energy”).

I wish we had less conformity in the field of physics and more outside-the-box thinkers. You refer to the present paradigm, and how impossible it is, but of course we need to look outside it.

But I am 100% sure that a correct cold fusion theory will one day arrive and we will get 100% reproducibility.

By the way, the work by SRI and McKubre proved a very good relationship between helium 4 and excess heat.

Dear Øystein, this is mainly a theory blog (apart from this post), and I’ve blogged extensively on Hagelstein’s theories, see https://coldfusionblog.net/category/spin-boson/ . I don’t think his theories are going to work for reasons suggested at https://coldfusionblog.net/2017/06/18/spin-boson-model-quick-update/

There’s an idea in pop culture that dark matter and dark energy prove that our current theoretical physics paradigm is woefully deficient and soon to be overturned, but this idea is totally off-base. Take dark matter. The standard model of particle physics has 37 elementary particles, i.e. the 37 we know about, and nothing in modern particle physics theory predicts that these 37 are a complete list. In fact, quite the contrary: modern particle physics theory offers very strong reasons to believe that this is not a complete list, that there are more we haven’t seen yet. Dark matter is presumably one (or more) of those extra particles. We’d all still like to know exactly what particle it is, but again, dark matter particles are a generic prediction of the current theoretical physics paradigm, not a threat to that paradigm. Dark energy likewise fits perfectly well into the modern theoretical physics paradigm; indeed, Einstein added dark energy (aka the cosmological constant) to general relativity in 1917, just two years after proposing the theory. Are physicists working on dark energy? Yes, because we expect that eventually the amount of dark energy will be a prediction (well, postdiction) coming out of the theory, rather than an arbitrary parameter we put into the theory. But it is already in there. By the way, we should really be discussing this at https://coldfusionblog.net/2018/07/24/nope/

I discussed the SRI heat-helium results in the post. The story is murkier than you portray.

And by the way,

It came as a huge surprise to the physics community that thunderstorms could produce gamma ray bursts and even antimatter.

And no, it is not very reproducible, i.e. it doesn’t happen in every storm 😉

I believe physicists are still scratching their heads to find the right theory here to be tested. What are the conditions required to get these events?

Oystein,

No one is arguing there aren’t things out there left to discover. What is being presented here in this blog (quite well I might add) is a discussion of whether what Fleischmann and Pons claimed, along with those who make related claims, is believable. The net conclusion Steve has come to is ‘No, not really.’, and he presents his arguments for that position for open discussion, as is the norm in scientific circles. Dragging thunderstorms, etc., into the argument is just a red herring tactic to distract from the fact that there is not compelling evidence for LENR, and that there are rational suggestions as to what might actually be happening to produce the observed results. In normal science, such a situation usually implies that the researchers in the field need to do more work, specifically targeted at eliminating one of the options so that we all end up being ‘forced’ by reasonableness to conclude the remaining explanation is correct. That usually takes demonstrating reproducibility and control. In 30 years this should have occurred, but it hasn’t. Again, this usually means the researchers are looking at the wrong things. All of this Steve points out.

What Steve may not have pointed out as clearly is that there does appear to have been a real discovery by F&P. They found something that gives apparent excess heat signals. I proposed what that might be. No nuclear reactions required. Cold fusion researchers to a man have ignored or denigrated that view. That is what labels them as pseudoscientists. A real scientist would not resort to irrational tactics to deal with a critic. And that’s why 30 years from now, the same thing will be going on. (Isn’t one definition of insanity doing the same thing over and over while expecting different results?)

Kirk Shanahan

Hi Steve,

I’ve discovered your nice blog, after a recent post on LENR-Forum, which provided the link to this interesting post about the CF controversy on the eve of its 30th anniversary.